Nikolai Polley

DigiT4TAF -- Bridging Physical and Digital Worlds for Future Transportation Systems

Jul 03, 2025

Abstract: In the future, mobility will be strongly shaped by the increasing use of digitalization. Not only will individual road users be highly interconnected, but also the road and associated infrastructure. At that point, a Digital Twin becomes particularly appealing because, unlike a basic simulation, it offers a continuous, bilateral connection linking the real and virtual environments. This paper describes the digital reconstruction used to develop the Digital Twin of the Test Area Autonomous Driving-Baden-Württemberg (TAF-BW), Germany. The TAF-BW offers a variety of different road sections, from high-traffic urban intersections and tunnels to multilane motorways. The test area is equipped with a comprehensive Vehicle-to-Everything (V2X) communication infrastructure and multiple intelligent intersections equipped with camera sensors to facilitate real-time traffic flow monitoring. The generation of authentic data as input for the Digital Twin was achieved by extracting object lists at the intersections. This process was facilitated by the combined utilization of camera images from the intelligent infrastructure and LiDAR sensors mounted on a test vehicle. Using a unified interface, recordings from real-world detections of traffic participants can be resimulated. Additionally, the simulation framework's design and the reconstruction process are discussed. The resulting framework is made publicly available for download and utilization at: https://digit4taf-bw.fzi.de. The demonstration uses two case studies to illustrate the application of the digital twin and its interfaces: the analysis of traffic signal systems to optimize traffic flow and the simulation of security-related scenarios in the communications sector.

Fool the Stoplight: Realistic Adversarial Patch Attacks on Traffic Light Detectors

Jun 05, 2025

Abstract: Realistic adversarial attacks on various camera-based perception tasks of autonomous vehicles have been successfully demonstrated so far. However, only a few works have considered attacks on traffic light detectors. This work shows how CNNs for traffic light detection can be attacked with printed patches. We propose a threat model in which each instance of a traffic light is attacked with a patch placed under it, and describe a training strategy. We demonstrate successful adversarial patch attacks in universal settings. Our experiments show realistic targeted red-to-green label-flipping attacks and attacks on pictogram classification. Finally, we perform a real-world evaluation with printed patches and demonstrate attacks in a lab setting with a mobile traffic light for construction sites and in a test area with stationary traffic lights. Our code is available at https://github.com/KASTEL-MobilityLab/attacks-on-traffic-light-detection.
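The threat model above places a patch directly under each traffic light instance. As a rough illustration only, the following sketch pastes a patch below a bounding box in an image array. The function name `place_patch_below`, the `(x1, y1, x2, y2)` box format, and the horizontal centring are assumptions made for this example and are not taken from the paper or its repository.

```python
import numpy as np

def place_patch_below(image, box, patch):
    """Paste `patch` directly under a bounding box (hypothetical helper).

    image: H x W x C array, box: (x1, y1, x2, y2) in pixels,
    patch: h x w x C array. The patch is centred horizontally under the
    box and clipped at the image border.
    """
    img_h, img_w = image.shape[:2]
    patch_h, patch_w = patch.shape[:2]
    x1, y1, x2, y2 = box

    # Top-left corner of the patch: centred under the box, starting at its bottom edge.
    left = (x1 + x2) // 2 - patch_w // 2
    top = y2

    # Clip the target region to the image bounds.
    l0, t0 = max(left, 0), max(top, 0)
    r0, b0 = min(left + patch_w, img_w), min(top + patch_h, img_h)

    out = image.copy()
    if r0 > l0 and b0 > t0:
        out[t0:b0, l0:r0] = patch[t0 - top:b0 - top, l0 - left:r0 - left]
    return out

# Usage: a 2x2 patch under a box in a blank 10x10 RGB image.
image = np.zeros((10, 10, 3))
patched = place_patch_below(image, (4, 2, 6, 5), np.ones((2, 2, 3)))
```

In an actual attack the patch content would be optimized during training and the placement would have to account for perspective and printing effects, which this sketch ignores.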

The ATLAS of Traffic Lights: A Reliable Perception Framework for Autonomous Driving

Apr 28, 2025

Abstract: Traffic light perception is an essential component of the camera-based perception system for autonomous vehicles, enabling accurate detection and interpretation of traffic lights to ensure safe navigation through complex urban environments. In this work, we propose a modularized perception framework that integrates state-of-the-art detection models with a novel real-time association and decision framework, enabling seamless deployment into an autonomous driving stack. To address the limitations of existing public datasets, we introduce the ATLAS dataset, which provides comprehensive annotations of traffic light states and pictograms across diverse environmental conditions and camera setups. This dataset is publicly available at https://url.fzi.de/ATLAS. We train and evaluate several state-of-the-art traffic light detection architectures on ATLAS, demonstrating significant performance improvements in both accuracy and robustness. Finally, we evaluate the framework in real-world scenarios by deploying it in an autonomous vehicle to make decisions at traffic light-controlled intersections, highlighting its reliability and effectiveness for real-time operation.

TLD-READY: Traffic Light Detection -- Relevance Estimation and Deployment Analysis

Sep 11, 2024

Abstract: Effective traffic light detection is a critical component of the perception stack in autonomous vehicles. This work introduces a novel deep-learning detection system while addressing the challenges of previous work. Utilizing a comprehensive dataset amalgamation, including the Bosch Small Traffic Lights Dataset, LISA, the DriveU Traffic Light Dataset, and a proprietary dataset from Karlsruhe, we ensure a robust evaluation across varied scenarios. Furthermore, we propose a relevance estimation system that uses directional arrow markings on the road, eliminating the need for prior map creation. On the DriveU dataset, this approach results in 96% accuracy in relevance estimation. Finally, a real-world evaluation is performed to assess the deployment and generalization abilities of these models. For reproducibility and to facilitate further research, we provide the model weights and code: https://github.com/KASTEL-MobilityLab/traffic-light-detection.

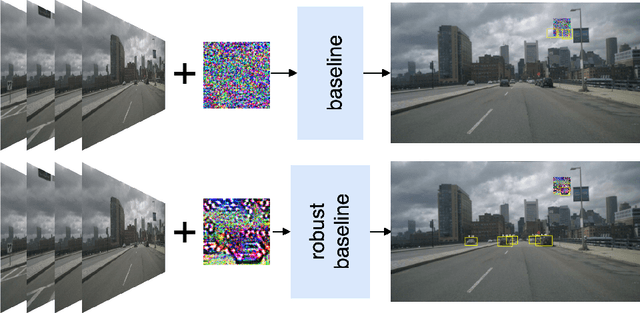

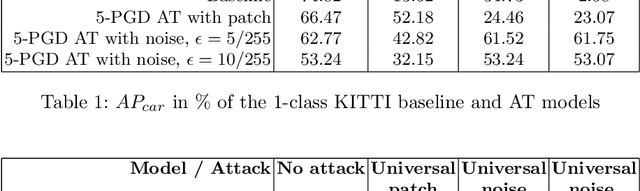

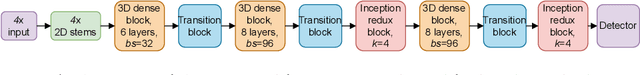

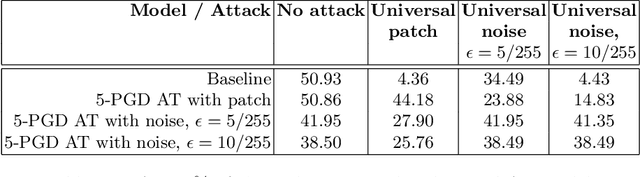

Adversarial Vulnerability of Temporal Feature Networks for Object Detection

Aug 23, 2022

Abstract: Taking into account information across the temporal domain helps to improve environment perception in autonomous driving. However, it has not been studied so far whether temporally fused neural networks are vulnerable to deliberately generated perturbations, i.e., adversarial attacks, or whether temporal history is an inherent defense against them. In this work, we study whether temporal feature networks for object detection are vulnerable to universal adversarial attacks. We evaluate attacks of two types: imperceptible noise for the whole image and a locally bound adversarial patch. In both cases, perturbations are generated in a white-box manner using PGD. Our experiments confirm that attacking even a portion of a temporal input suffices to fool the network. We visually assess generated perturbations to gain insights into the functioning of attacks. To enhance the robustness, we apply adversarial training using 5-PGD. Our experiments on KITTI and nuScenes datasets demonstrate that a model robustified via K-PGD is able to withstand the studied attacks while keeping the mAP-based performance comparable to that of an unattacked model.
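For readers unfamiliar with PGD (projected gradient descent), the sketch below shows the basic white-box loop: step along the sign of the loss gradient, then project back into an L-infinity ball around the original input. It is a per-input attack on a toy logistic-regression classifier, not the universal attack or the temporal detection networks studied in the paper. The function name `pgd_linf`, the model parameters, and the values of `eps`, `alpha`, and `steps` are illustrative assumptions.

```python
import numpy as np

def pgd_linf(x, y, w, b, eps=0.1, alpha=0.02, steps=5):
    """L-infinity PGD against a toy logistic-regression model (illustrative).

    x: input vector with values in [0, 1], y: true label in {0, 1},
    (w, b): hypothetical model parameters known to the attacker (white-box).
    """
    x_adv = x.copy()
    for _ in range(steps):
        # Forward pass: predicted probability of class 1.
        p = 1.0 / (1.0 + np.exp(-(w @ x_adv + b)))
        # Gradient of the binary cross-entropy loss with respect to the input.
        grad = (p - y) * w
        # Ascent step in the direction that increases the loss.
        x_adv = x_adv + alpha * np.sign(grad)
        # Projection onto the eps-ball around x, then onto the valid input range.
        x_adv = np.clip(x_adv, x - eps, x + eps)
        x_adv = np.clip(x_adv, 0.0, 1.0)
    return x_adv

# Usage: five steps (comparable in spirit to "5-PGD") on a three-feature input.
w = np.array([1.0, -2.0, 0.5])
b = 0.0
x = np.array([0.5, 0.5, 0.5])
x_adv = pgd_linf(x, y=1, w=w, b=b)
```

Adversarial training with K-step PGD would generate such perturbed inputs inside the training loop and train the model on them. A universal variant would instead optimize a single perturbation shared across many inputs.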
